Google this year has made some big changes to Assistant, Meet, and its suite of productivity apps. Now, at the company’s Search On event on Thursday, Google detailed several changes coming to some of its other major services, including Google Search, Google Lens, Google Maps, and Google Duplex.

Google Search

Google said Search is making its biggest leap forward in a decade. The company has already made major strides in improving the quality of results for search queries. Last year, Google detailed its use of Bidirectional Encoder Representations from Transformers (BERT), a neural network-based technique for natural language processing (NLP), to train a “state-of-the-art question answering system.” Google says that BERT is “now used in almost every query in English.”

In addition, Google Search can now better understand misspelled words — which is important, because Google said 1 in 10 queries are misspelled. The new algorithm uses a deep neural net that will allow Search to better decipher misspellings, so even when a word isn’t spelled correctly, Search will understand the context and suggest a correction.

Google is also making Search better at surfacing specific information. Rather than leaving the info you need buried deep in a web page, Google Search will be able to display individual passages from pages. “By better understanding the relevancy of specific passages, not just the overall page, we can find that needle-in-the-haystack information you’re looking for,” Google said.

Google said it’s now able to identify key moments in videos, too. Using AI, it can highlight specific moments in a video, allowing viewers to navigate them like chapters in a book. Google said the feature will come in handy when you’re watching a recipe guide or watching sports highlights. This feature has already been in testing, but Google expects that 10% of Google searches will use this technology by the end of this year.

Google Search is also getting a deeper understanding of subtopics around an interest, in order to deliver more diverse content when searching for something broad. As an example, searching on Google for “home exercise equipment” will surface relevant subtopics like budget equipment, premium picks, and small space ideas. These will be shown on the search results page as cards, but the feature is not live yet and will roll out by the end of this year.

When your search involves statistical data, Google will now attempt to map your query to “one specific set of the billions of data points” from the Data Commons Project, a database of statistical data built in collaboration with the U.S. Census Bureau, the Bureau of Labor Statistics, the World Bank, and other organizations. If you ask Google how many people work in Chicago, for example, you’ll see an easy-to-understand visual with the right stat along with other relevant data and context.

One of the most exciting new features in Google Search is “hum to search,” which lets you search for a song by simply humming the tune. To use it, open the Google app or tap the Google Search widget, then tap the mic icon and ask “what’s this song?” or tap the “Search a song” button. You can also ask Google Assistant “what’s this song?” Then hum the tune for 10 to 15 seconds. Google runs your humming through a machine learning algorithm to identify potential song matches. You don’t need perfect pitch, since Google Search will show you a list of likely matches from which you can pick the best one.

Once you pick a match, you’ll be able to explore relevant information about the song and artist, see the accompanying music video (if any), find lyrics, start listening to it on supported music apps, and more. This feature is available in English for iOS users and in more than 20 languages on Android. If this feature seems like black magic to you and you’re wondering how it even works, you can read Google’s high-level overview of how Google Search can recognize melodies. What’s interesting is how Google cites this work as an extension of the Pixel’s Now Playing feature, which debuted on the Pixel 2 back in 2017.

Lastly, Google is launching Pinpoint, a new tool for journalists that sifts through documents online and automatically identifies and organizes them by the most frequently mentioned people, organizations, and locations.

Google Maps

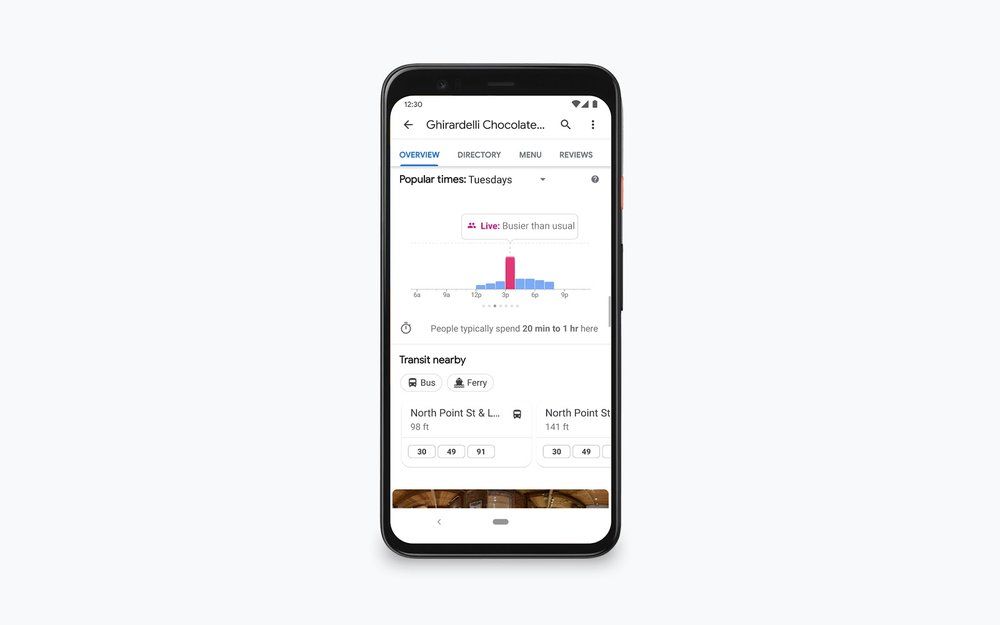

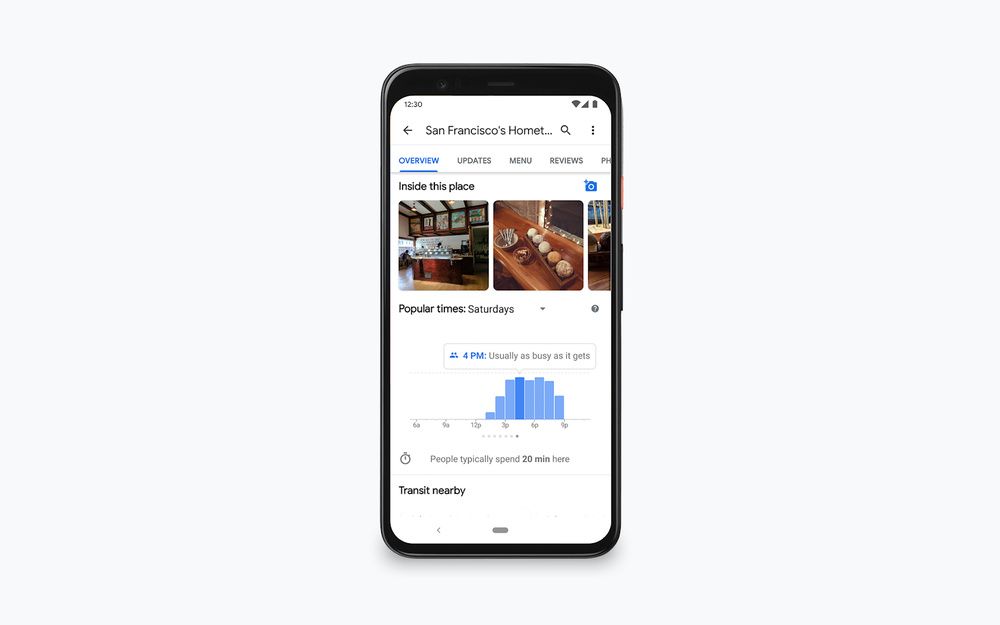

To help people navigate and stay safe during the ongoing COVID-19 pandemic, Google is expanding its live busyness feature in Google Maps. The feature builds on the busyness information Google added to Maps back in 2016, which used historical data to tell people how busy a place might be at a given time.

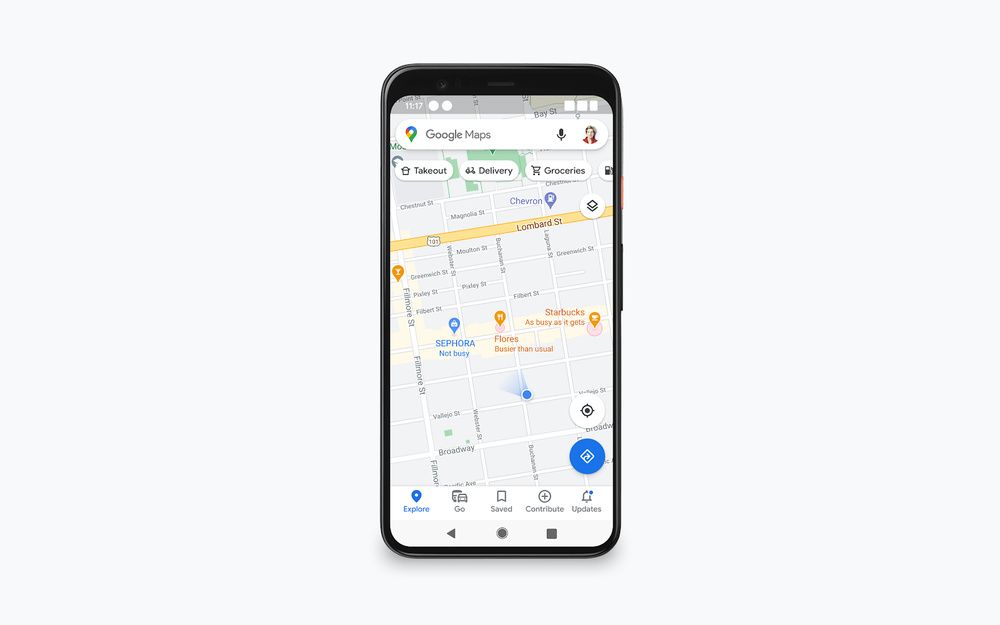

Google is expanding live busyness coverage fivefold globally compared to June 2020, giving people around the world the data they need to plan their trips to the store. The expansion will include more outdoor areas and essential places, such as grocery stores, gas stations, and laundromats. Busyness information will also appear in directions and right on the map, so you can get an idea of where crowds are in your area.

In addition, even if historical data shows a place isn’t busy on a certain day, live busyness can still reflect when a place is busy, like when an ice cream shop is giving away a free scoop on Tuesday. Live busyness updates are coming soon to Android, iOS, and desktop users around the world.

Next, Google will show more health and safety information about businesses in Google Maps and Google Search results. A Health & Safety page will show whether an establishment requires reservations, requires masks, checks temperatures, and so on. This information comes directly from the businesses themselves and is regularly updated by Google using its Duplex conversational tool to call businesses.

Live View, an augmented reality view of the world around you within Google Maps, can now show you if a place is open, how busy it is, what its star rating is, and if any relevant health and safety information is available. All you have to do is point your camera at the location to get all of this data.


Google Lens

Google Lens has always been a fantastic tool for identifying plants, animals, landmarks, and more. Recently, the tool even became a lifesaver for students who need help solving a homework problem.

Now, Google is making Lens even handier for shopping. Users will be able to find clothing items just from a picture by tapping and holding on an image in the Google app or Chrome for Android. Google says Lens uses Style Engine technology to combine its database of products with millions of style images.

Google Lens will also bring new car models into the real world, giving people a better idea of what a car looks like outside of a showroom. You’ll be able to see what the car looks like in different colors, zoom in to see intricate details, view the car against different backdrops, and experiment with seeing it in your driveway. Google is working with auto brands like Volvo and Porsche to bring their cars to life in AR.


Google Duplex

Finally, Google is making improvements to Duplex. As previously mentioned, Google said it has been using Duplex to call businesses and update their listings on Google Maps and Google Search to more accurately reflect their status under COVID-19 lockdown restrictions. That means details such as hours of operation, whether takeout is offered, and whether no-contact delivery is available are being kept up to date, all without a business having to update its info manually.

Google Duplex is also being applied to the web to make arduous tasks a little easier. Using Google Duplex in Chrome, users can quickly complete tasks that would otherwise take up to 20 steps to complete, like renting a vehicle or buying a movie ticket. Google said these same features will soon come to shopping and ordering food for a faster, smoother checkout experience.

In addition, Google highlighted how the company recently started rolling out automated salon appointments for Duplex on mobile, and how the new Hold For Me feature on the Pixel 5 makes use of Duplex to wait on the line for you.


We weren’t expecting much from Google’s Search On event given that Google only teased it earlier this week, but the company has surprised us by announcing a plethora of new features coming to Google Search, Google Maps, Google Lens, and Google Duplex. What do you make of all these new features?

The post Google details new features coming to Search, Lens, Maps, and Duplex appeared first on xda-developers.
